In the era of multi-camera setups, synchronizing multiple camera sensors is becoming more and more important. When you build a multi-camera setup, depending on the problem requirements, the cameras need to be aligned both spatially and temporally. Spatial alignment is solved by calibrating the cameras (extrinsic and stereo calibration), which establishes the correspondence between the 2D image plane and 3D world coordinates. Temporal alignment requires syncing the cameras' timestamps so that the frames from each camera are aligned in time.

Online Hardware Solution for Temporal Alignment

Currently, there are hardware solutions that act as a global trigger for every camera. Commercially available trigger hardware can drive up to 6 cameras, so every group of 6 cameras needs its own global trigger. Syncing more than 6 cameras therefore means the trigger units themselves must also be synchronized, and the amount of hardware grows with the number of cameras: one trigger unit for every 6 cameras.
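The hardware count grows in steps rather than smoothly. A small sketch of that arithmetic, assuming each trigger unit drives at most 6 cameras as stated above (the function name is ours, purely illustrative):

```python
import math


def trigger_units_needed(n_cameras: int, cameras_per_unit: int = 6) -> int:
    """Number of global-trigger units needed when one unit drives at most
    `cameras_per_unit` cameras (6 is the figure quoted in the text)."""
    if n_cameras <= 0:
        return 0
    return math.ceil(n_cameras / cameras_per_unit)


for n in (4, 6, 7, 12, 20):
    units = trigger_units_needed(n)
    # With more than one unit, the units themselves must also be synchronized.
    note = "units must also be synced" if units > 1 else "single unit"
    print(f"{n:2d} cameras -> {units} trigger unit(s), {note}")
```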

Offline Alignment

As you might guess, we can leverage the audio-visual features of the videos to sync them offline. However, visual features change continuously, and there is no guarantee that a given feature appears in every video at the same time, so it is hard to find the time offset between frames from visual content alone.

Audio is a different story: all the audio input sensors (i.e. the microphones) record the same scene, so their recordings inherently share the same audio features, shifted by a time offset. By leveraging this property, we can sync the videos. First, let's look at a useful signal processing technique: cross-correlation.
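Written as a simple model (our simplification: it ignores differences in gain, microphone response and distance to the sound source), let $x[n]$ be the reference recording and $y[n]$ another camera's recording, both sampled at $f_s$ Hz. Then

$$
y[n] = x[n - d] + \varepsilon[n], \qquad \Delta t = \frac{d}{f_s},
$$

where $d$ is the unknown offset in samples (positive when the shared sound appears later in $y$), $\varepsilon[n]$ collects everything the two microphones do not share, and $\Delta t$ is the time offset we are after.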

According to Wikipedia, cross-correlation in signal processing is a measure of similarity of two series as a function of the displacement of one relative to the other. This is also known as a sliding dot product or sliding inner product. It is commonly used for searching a long signal for a shorter, known feature. The cross-correlation is similar in nature to the convolution of two functions. In an autocorrelation, which is the cross-correlation of a signal with itself, there will always be a peak at a lag of zero, and its size will be the signal energy.
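For real-valued audio samples, the discrete cross-correlation of a reference $x$ with another recording $y$ at lag $k$, and the lag estimate read off its peak, can be written as:

$$
r_{xy}[k] = \sum_{m} x[m]\, y[m+k], \qquad \hat{d} = \arg\max_{k}\, r_{xy}[k].
$$

If $y[n] = x[n-d]$ exactly, then $r_{xy}[k] = \sum_m x[m]\, x[m+k-d] = r_{xx}[k-d]$. By the Cauchy-Schwarz inequality, $|r_{xx}[j]| \le r_{xx}[0] = \sum_m x[m]^2$ (the signal energy), so the autocorrelation peaks at zero lag and $r_{xy}$ peaks at $k = d$. Noise and reverberation blur this in practice, but the peak should stay at or near $d$ as long as the shared sound dominates.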

In short, we can pick a reference audio track and search for similar signal patterns in the audio of the other videos. To do so, we will use a third-party tool, Praat.
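Praat does the heavy lifting in this post, but the underlying idea fits in a few lines. The following is a brute-force sketch of our own (not Praat's implementation): it scores every lag in a window with a sliding dot product and returns the best one. It costs O(N·K) for N samples and K lags, so real tools use faster FFT-based methods; treat it as an illustration on short clips.

```python
from typing import Dict, Sequence


def cross_correlation(ref: Sequence[float], other: Sequence[float],
                      max_lag: int) -> Dict[int, float]:
    """Sliding dot product r[k] = sum_m ref[m] * other[m + k]
    for every lag k in [-max_lag, max_lag]; samples outside `other` count as 0."""
    scores: Dict[int, float] = {}
    for k in range(-max_lag, max_lag + 1):
        total = 0.0
        for m, x in enumerate(ref):
            j = m + k
            if 0 <= j < len(other):
                total += x * other[j]
        scores[k] = total
    return scores


def estimate_lag(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) with the highest correlation. A positive value means the
    reference sound shows up that many samples later in `other`."""
    scores = cross_correlation(ref, other, max_lag)
    return max(scores, key=lambda k: scores[k])


# Tiny example: `other` is `ref` delayed by 4 samples.
ref = [0.0, 0.0, 3.0, -1.0, 2.0, 0.0, 0.0, 0.0, 0.0, 0.0]
other = [0.0] * 4 + ref[:-4]
print(estimate_lag(ref, other, max_lag=6))  # 4
```

Dividing the returned lag by the sampling rate gives the offset in seconds.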

Cross-correlating a reference audio track with the other audio tracks is a common method for synchronizing videos. Here is the recipe:

1. Extract the audio with a tool such as ffmpeg.
2. Choose a reference audio track.
3. Cross-correlate each audio track with the reference audio.
4. Determine the offset of each video with respect to the reference.

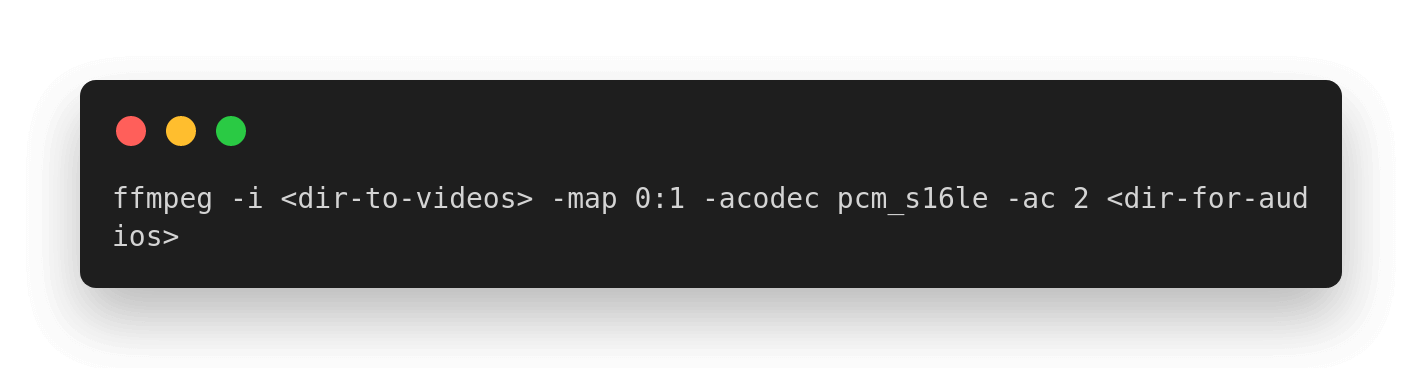

Extract The Audio

We will use ffmpeg to extract the audio from each video. You can use the following bash command to do so:
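If you prefer to drive the extraction from a script, the sketch below builds and runs an ffmpeg call with Python's `subprocess` module. The flags (`-vn`, `-acodec pcm_s16le`, `-ar`, `-ac`) are standard ffmpeg options; the 16 kHz mono WAV target and the file names are our assumptions. Using one sample rate for every file keeps lags measured in samples directly comparable.

```python
import subprocess
from typing import List


def ffmpeg_extract_cmd(video_path: str, wav_path: str,
                       sample_rate: int = 16000) -> List[str]:
    """Build an ffmpeg command that drops the video stream and writes
    mono, 16-bit PCM WAV audio at `sample_rate` Hz."""
    return [
        "ffmpeg",
        "-y",                     # overwrite the output file if it exists
        "-i", video_path,         # input video
        "-vn",                    # ignore the video stream
        "-acodec", "pcm_s16le",   # uncompressed 16-bit little-endian PCM
        "-ar", str(sample_rate),  # resample to a common rate
        "-ac", "1",               # downmix to mono
        wav_path,
    ]


def extract_audio(video_path: str, wav_path: str, sample_rate: int = 16000) -> None:
    """Run ffmpeg (assumed to be on PATH); raises CalledProcessError on failure."""
    subprocess.run(ffmpeg_extract_cmd(video_path, wav_path, sample_rate), check=True)
```

For a hypothetical `cam1.mp4`, `extract_audio("cam1.mp4", "cam1.wav")` is equivalent to running `ffmpeg -y -i cam1.mp4 -vn -acodec pcm_s16le -ar 16000 -ac 1 cam1.wav` in a shell.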

Choose The Reference Audio

Choosing the video that started recording last is a wise choice, since the reference audio must be contained in every other video. Let's assume the reference audio is saved as ref.wav.
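The selection rule is easy to state in code. A sketch, assuming you know each recording's start time at least roughly (for example from notes or file metadata; the dictionary below is hypothetical): the latest starter is picked because every other camera was already rolling when it began.

```python
from typing import Dict


def choose_reference(approx_start_s: Dict[str, float]) -> str:
    """Return the recording that started last (largest approximate start time).
    Every other recording was already running at that moment, so the
    reference's opening audio should be present in all of them."""
    if not approx_start_s:
        raise ValueError("need at least one recording")
    return max(approx_start_s, key=lambda name: approx_start_s[name])


# Hypothetical rough start times in seconds on a shared wall clock.
starts = {"cam1.wav": 0.0, "cam2.wav": 2.5, "cam3.wav": 1.2}
print(choose_reference(starts))  # cam2.wav
```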

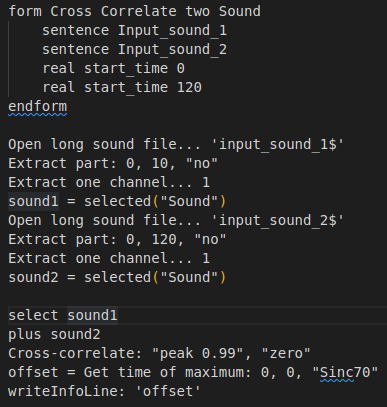

Cross-correlate Audio Signals

As stated above, we will use Praat for the cross-correlation. You can save the following script as "crosscorrelate.praat" in the working directory:

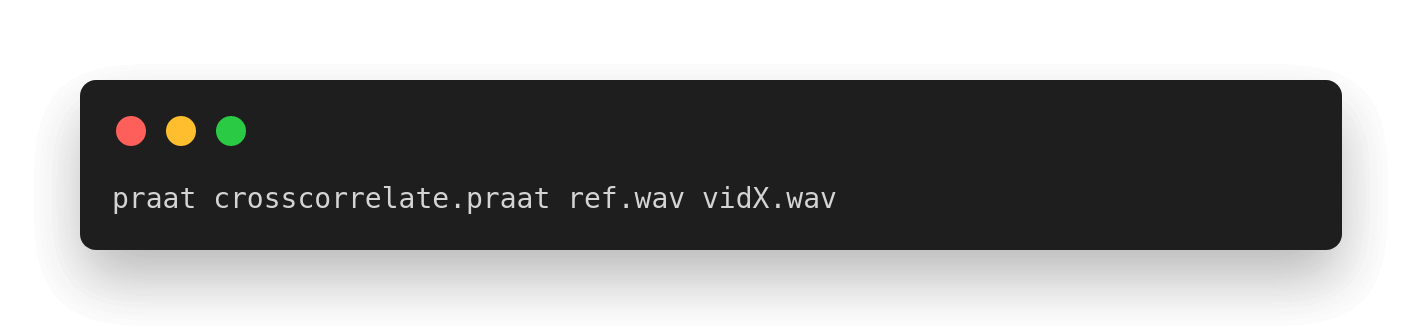

With this script, we can run the cross-correlation with a single bash command.
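To run the script over many files from Python, you could wrap the command line in a helper like the one below. This is a sketch under assumptions: `praat --run` is Praat's command-line mode and the executable is assumed to be on your PATH as `praat`, but the argument order (reference first, then the other file) and the output format (the offset in seconds as the first number printed) depend on how crosscorrelate.praat is written, so adjust both to match your script.

```python
import subprocess
from typing import List


def praat_cmd(script: str, ref_wav: str, other_wav: str) -> List[str]:
    # Assumed argument order; it must match the form in your Praat script.
    return ["praat", "--run", script, ref_wav, other_wav]


def parse_offset(stdout: str) -> float:
    """Return the first number in Praat's output (assumed: offset in seconds)."""
    for token in stdout.split():
        try:
            return float(token)
        except ValueError:
            continue
    raise ValueError(f"no number found in Praat output: {stdout!r}")


def praat_offset(script: str, ref_wav: str, other_wav: str) -> float:
    """Run Praat and parse the offset it prints."""
    result = subprocess.run(praat_cmd(script, ref_wav, other_wav),
                            capture_output=True, text=True, check=True)
    return parse_offset(result.stdout)
```

For a hypothetical second recording, the call would be `praat_offset("crosscorrelate.praat", "ref.wav", "cam1.wav")`.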

Determine The Offset

With the following bash command, you can print the offset of each audio track with respect to the reference audio.
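If you compute lags in samples yourself instead of reading seconds from Praat, turning them into per-video offsets is a division by the common sampling rate. A sketch, assuming lags follow the convention r[k] = Σ ref[m]·other[m + k], so a positive lag means the reference sound appears later in `other` (that video started earlier). The file names and lag values are hypothetical.

```python
from typing import Dict


def offsets_in_seconds(lags: Dict[str, int], sample_rate: int) -> Dict[str, float]:
    """Convert per-file lags (in samples, relative to the reference) to seconds."""
    if sample_rate <= 0:
        raise ValueError("sample_rate must be positive")
    return {name: lag / sample_rate for name, lag in lags.items()}


# Hypothetical lags at 16 kHz; the reference itself has lag 0.
lags = {"ref.wav": 0, "cam1.wav": 8000, "cam3.wav": 20000}
offsets = offsets_in_seconds(lags, 16000)
for name, seconds in sorted(offsets.items()):
    # A positive offset is how much of this video precedes the reference's start.
    print(f"{name}: {seconds:.3f} s")
```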

Conclusion

With this method, you can find the time offset of each video with respect to a reference video without any hardware solution. Of course, the solution has some drawbacks, since it syncs the videos based on their audio. For instance, suppose you have recordings at 25 fps and want to sync them. At 25 fps, consecutive frames are 40 ms apart, so even a perfectly estimated offset can only be applied to the nearest frame, and you will definitely see some jitter between frames. It is also wise to check the frames after applying the offset.
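The frame-rate limitation can be made concrete. A small sketch (the function name is ours) that rounds an audio-derived offset to whole frames and reports the leftover error; at 25 fps the frame period is 1/25 s, so the residual can be as large as half of that, 20 ms:

```python
from typing import Tuple


def offset_to_frames(offset_s: float, fps: float) -> Tuple[int, float]:
    """Round an offset in seconds to the nearest whole frame.
    Returns (frames, residual_s) where residual_s = offset_s - frames / fps;
    its magnitude is at most half a frame period, 0.5 / fps
    (up to floating-point rounding)."""
    frames = round(offset_s * fps)
    residual_s = offset_s - frames / fps
    return frames, residual_s


fps = 25.0
for offset in (0.5, 1.23, 2.019):
    frames, residual = offset_to_frames(offset, fps)
    print(f"{offset:.3f} s -> {frames} frames, residual {residual * 1000:+.1f} ms")
```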